After upgrading our mirror server from Fedora 17 to Fedora 19 two weeks ago, I was curious to try out bcache. Knowing how important filesystem caching is for a file server like ours, we have always tried to have as much memory as "possible". The current system has 128GB of memory, and at least 90% of it is used as filesystem cache. So bcache sounds like a very good idea to provide another layer of caching for all the IO we are doing. By chance I had an external RAID available with 12 x 1TB hard disk drives, which I configured as a RAID6, and 4 x 128GB SSDs, which I configured as a RAID10.

After modprobing the bcache kernel module and installing the necessary bcache-tools, I created the bcache backing device and caching device as described here.
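For reference, the device setup can be sketched roughly like this. It is a minimal sketch, not a record of the exact commands I ran: the device names /dev/sdb (the RAID6) and /dev/sdc (the SSD RAID10) and the helper `make_bcache_commands` are hypothetical. It only prints the commands. Passing both `-B` and `-C` to a single make-bcache call formats the backing and the caching device together and attaches them, so no separate attach step is needed.

```python
import shlex


def make_bcache_commands(backing: str, cache: str) -> list[list[str]]:
    """Return argv lists to load bcache and format a backing plus a caching device.

    Giving -B and -C to one make-bcache call formats both devices and
    attaches the backing device to the new cache set automatically.
    WARNING: make-bcache formats both devices; existing data on them is lost.
    """
    return [
        ["modprobe", "bcache"],
        ["make-bcache", "-B", backing, "-C", cache],
    ]


if __name__ == "__main__":
    # Hypothetical device names: /dev/sdb = 12 x 1TB RAID6, /dev/sdc = 4 x 128GB SSD RAID10.
    for argv in make_bcache_commands("/dev/sdb", "/dev/sdc"):
        print(shlex.join(argv))  # print only; nothing is executed
```

Depending on the installed udev rules, the formatted devices may also need to be registered by writing each device path to /sys/fs/bcache/register before /dev/bcache0 shows up.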

I then created the filesystem as I did with our previous RAIDs. For a RAID6 with 12 hard disk drives and a RAID chunk size of 512KB, I used `mkfs.ext4 -b 4096 -E stride=128,stripe-width=1280 /dev/bcache0`, although I am unsure how useful these options are when using bcache.
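The stride and stripe-width values follow from the RAID geometry: stride is the RAID chunk size divided by the filesystem block size, and stripe-width is stride times the number of data disks (12 disks minus 2 parity disks for RAID6). A small sketch of that arithmetic, using a hypothetical helper `ext4_raid_options`:

```python
def ext4_raid_options(chunk_kib: int, block_bytes: int, disks: int, parity_disks: int) -> tuple[int, int]:
    """Compute ext4 stride and stripe-width (both in filesystem blocks) for a striped RAID.

    stride       = RAID chunk size / filesystem block size
    stripe-width = stride * number of data-bearing disks
    """
    stride = chunk_kib * 1024 // block_bytes
    data_disks = disks - parity_disks
    return stride, stride * data_disks


# 512KB chunks, 4KB blocks, 12-disk RAID6 (2 disks' worth of parity).
stride, width = ext4_raid_options(chunk_kib=512, block_bytes=4096, disks=12, parity_disks=2)
print(f"mkfs.ext4 -b 4096 -E stride={stride},stripe-width={width} /dev/bcache0")
# prints: mkfs.ext4 -b 4096 -E stride=128,stripe-width=1280 /dev/bcache0
```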

So far it has worked pretty much flawlessly. To know what to expect from /dev/bcache0, I benchmarked it using bonnie++ and got 670MB/s for writing and 550MB/s for reading. Again, I am unsure how to interpret these values, as bcache tries to detect sequential IO and bypasses the cache device for sequential IO larger than 4MB.

Anyway, I started copying my fedora and fedora-archive mirrors to the bcache device, and we are now serving those two mirrors (only about 4.1TB) from it.

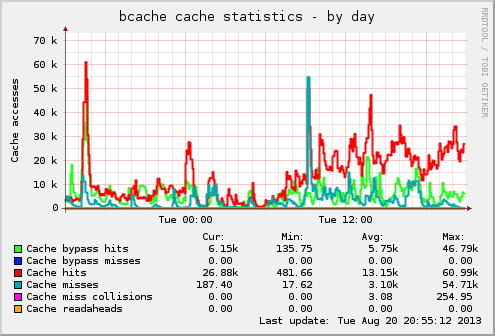

I have created a munin plugin to monitor the usage of the bcache device, and it shows many cache hits (right now more than 25K) and some cache misses (about 1K). So it seems that bcache does what it is supposed to do, and the number of IOs directly hitting the hard disk drives is much lower than it would be without the cache.
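The plugin I actually use is the one mentioned in the update at the end of this post. Purely as an illustration of the idea, here is a minimal sketch of such a munin plugin in Python. It assumes the device is bcache0 and reads the cumulative `cache_hits` and `cache_misses` counters from /sys/block/bcache0/bcache/stats_total/; all function names are hypothetical. munin runs a plugin with the argument `config` to get the graph definition and without arguments to get the current values, and the DERIVE type turns the ever-growing counters into rates.

```python
#!/usr/bin/env python3
# Hypothetical munin plugin sketch: graph bcache0 cache hits and misses.
import sys
from pathlib import Path

# Assumed location of the cumulative counters for the first bcache device.
STATS = Path("/sys/block/bcache0/bcache/stats_total")
FIELDS = ("cache_hits", "cache_misses")


def read_counter(path: Path) -> str:
    """Return the counter as a string, or munin's "U" (unknown) if unreadable."""
    try:
        return str(int(path.read_text().strip()))
    except (OSError, ValueError):
        return "U"


def config() -> str:
    """Graph definition printed when munin runs the plugin with 'config'."""
    lines = [
        "graph_title bcache0 cache hits and misses",
        "graph_category disk",
        "graph_vlabel IOs per ${graph_period}",
    ]
    for field in FIELDS:
        lines.append(f"{field}.label {field.replace('_', ' ')}")
        lines.append(f"{field}.type DERIVE")  # counters only grow; munin graphs the rate
        lines.append(f"{field}.min 0")
    return "\n".join(lines)


def values(stats_dir: Path = STATS) -> str:
    """Current counter values printed on a normal (argument-less) run."""
    return "\n".join(f"{f}.value {read_counter(stats_dir / f)}" for f in FIELDS)


def hit_ratio(hits: int, misses: int) -> float:
    """Fraction of cache lookups that were hits (0.0 if there were none)."""
    total = hits + misses
    return hits / total if total else 0.0


if __name__ == "__main__":
    print(config() if sys.argv[1:] == ["config"] else values())
```

With the numbers from the graph (about 25K hits and 1K misses), `hit_ratio(25000, 1000)` gives roughly 0.96, i.e. only about one in 26 cache lookups has to go to the hard disk drives.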

I also increased the cutoff above which sequential IO bypasses the cache from 4MB to 64MB.
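The cutoff is a per-device sysfs attribute. A minimal sketch of changing it, assuming the device is bcache0 and the attribute lives at /sys/block/bcache0/bcache/sequential_cutoff (writing it needs root). The helper names are hypothetical, and the script only touches sysfs when called with `--apply`:

```python
import sys
from pathlib import Path

# Assumed sysfs attribute of the first bcache device.
CUTOFF = Path("/sys/block/bcache0/bcache/sequential_cutoff")


def mib_to_bytes(mib: int) -> int:
    """Convert MiB to bytes."""
    return mib * 1024 * 1024


def set_sequential_cutoff(nbytes: int, attr: Path = CUTOFF) -> None:
    """Write the new cutoff in bytes to the sysfs attribute (requires root)."""
    attr.write_text(f"{nbytes}\n")


if __name__ == "__main__":
    new_cutoff = mib_to_bytes(64)  # 64MB instead of the default 4MB
    if "--apply" in sys.argv[1:]:
        set_sequential_cutoff(new_cutoff)
    print(f"sequential_cutoff -> {new_cutoff} bytes")
```

From a shell, echoing the same byte value into that file as root has the same effect.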

The user-space tools (bcache-tools) are not yet available in Fedora (as far as I can tell), but I found http://terjeros.fedorapeople.org/bcache-tools/, which I updated to the latest git: http://lisas.de/~adrian/bcache-tools/

Update: as requested, here is the munin plugin: bcache
